Google Researcher Presents Computer Vision Highlights at CAI Colloquium | ZHAW Zürcher Hochschule für Angewandte Wissenschaften

Google Leverages Transformers to Vastly Simplify Neural Video Compression With SOTA Results | Synced

Google AI Introduces OptFormer: The First Transformer-Based Framework For Hyperparameter Tuning - MarkTechPost

Google Research Proposes an Artificial Intelligence (AI) Model to Utilize Vision Transformers on Videos - MarkTechPost

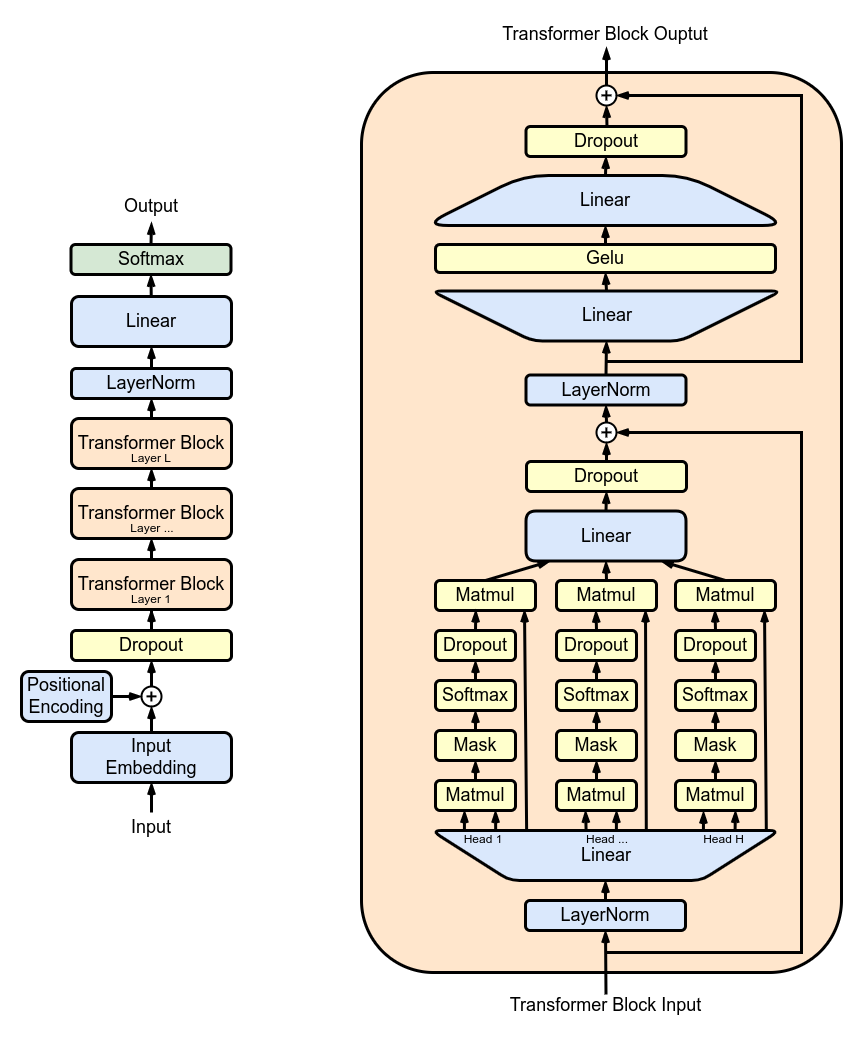

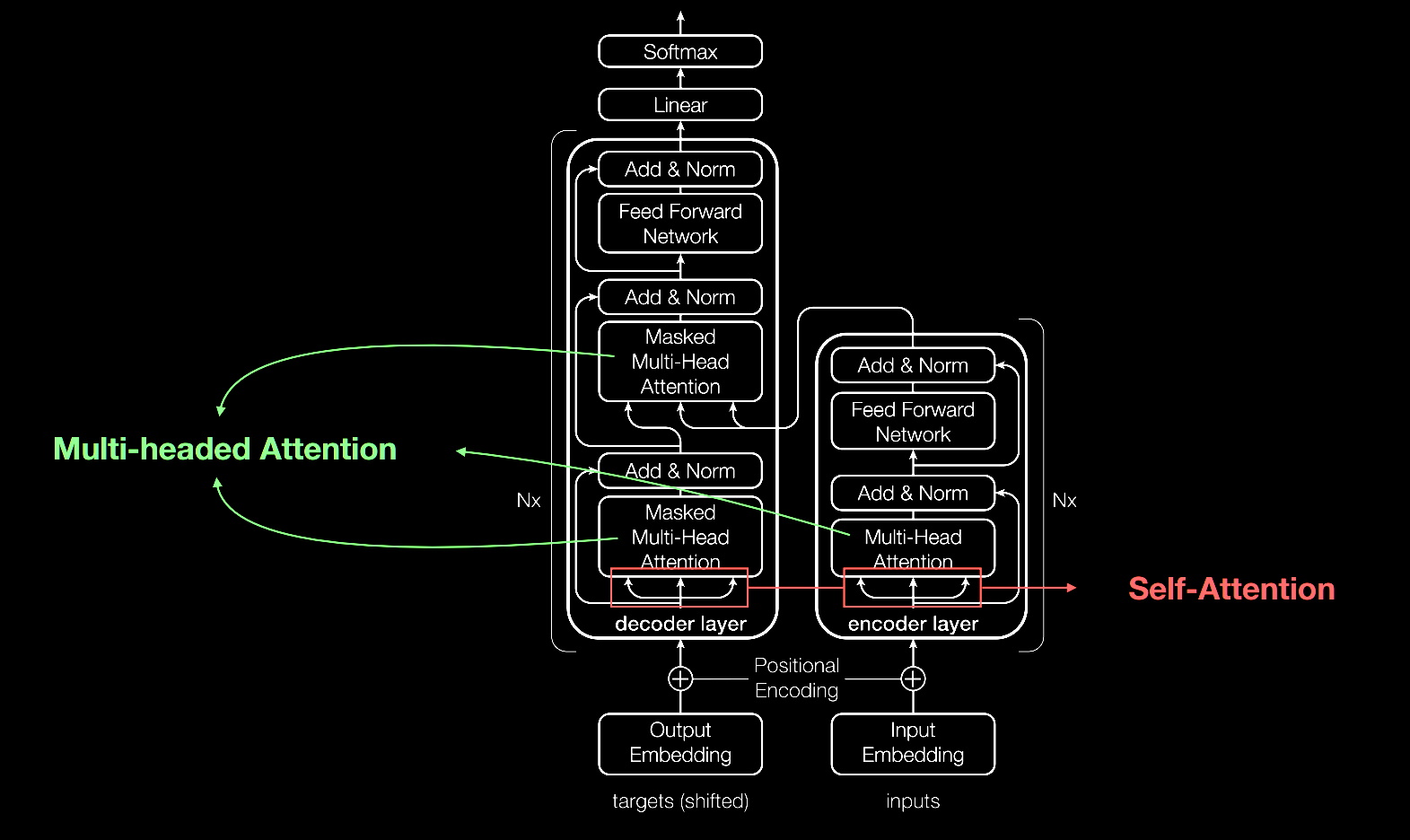

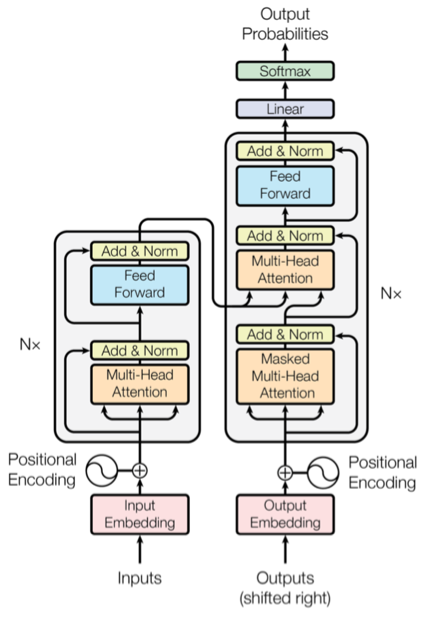

Architecture of the Transformer (Vaswani et al., 2017). We apply the...

Google Researchers Use Different Design Decisions To Make Transformers Solve Compositional NLP Tasks - MarkTechPost